BioCombat

BioCombat combines biofeedback art with the idea of gaming. It is a piece with two video projections, two laptops and live electroacoustic sound. It is performed by two performers whose heart rate, galvanic skin response and EEG data are tracked live to influence graphics and sound.

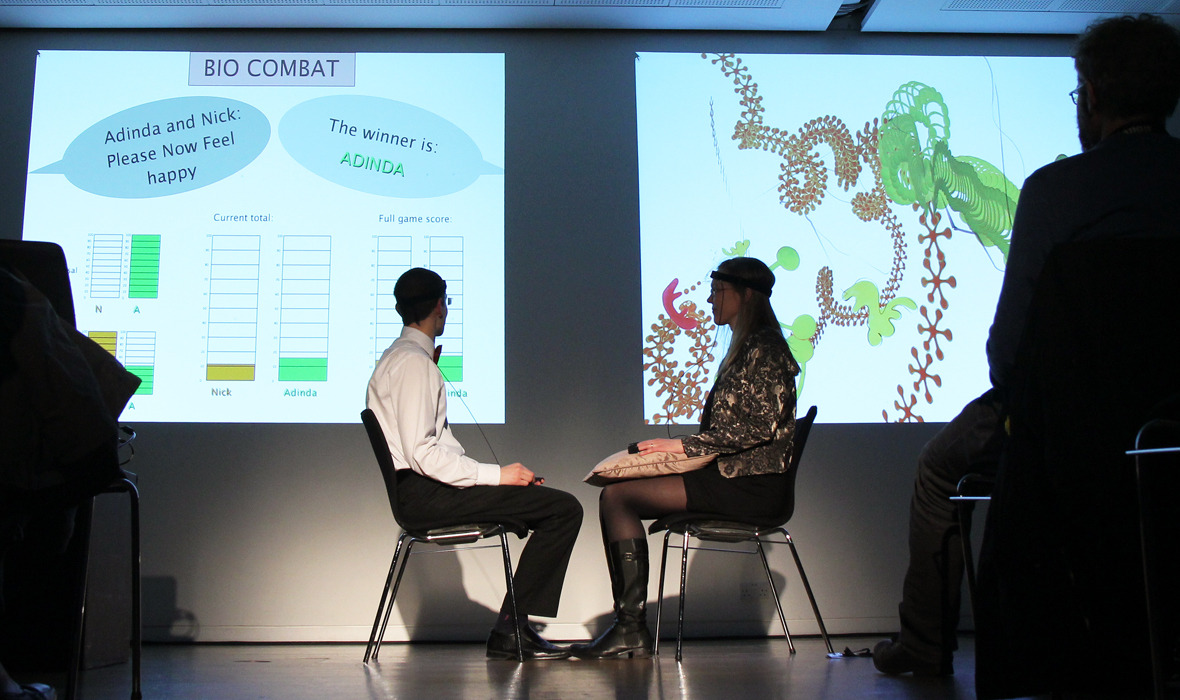

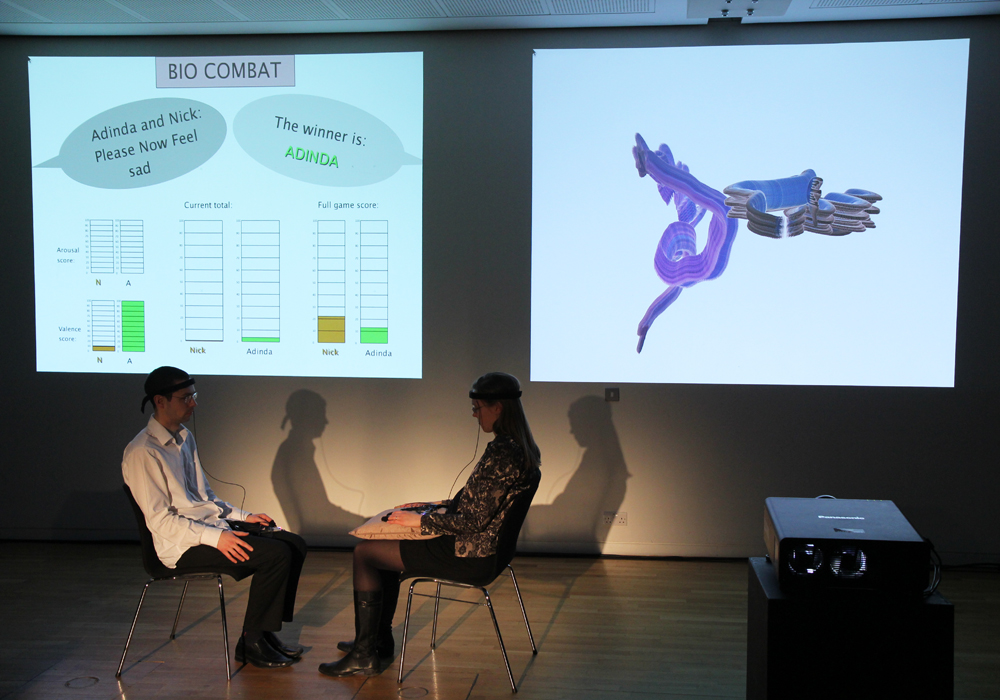

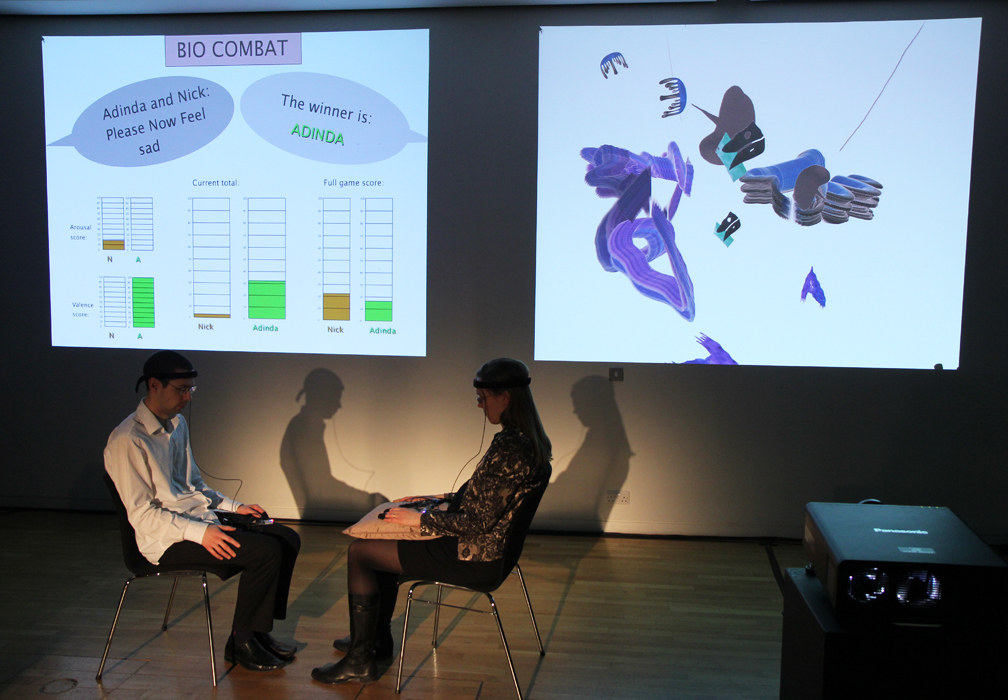

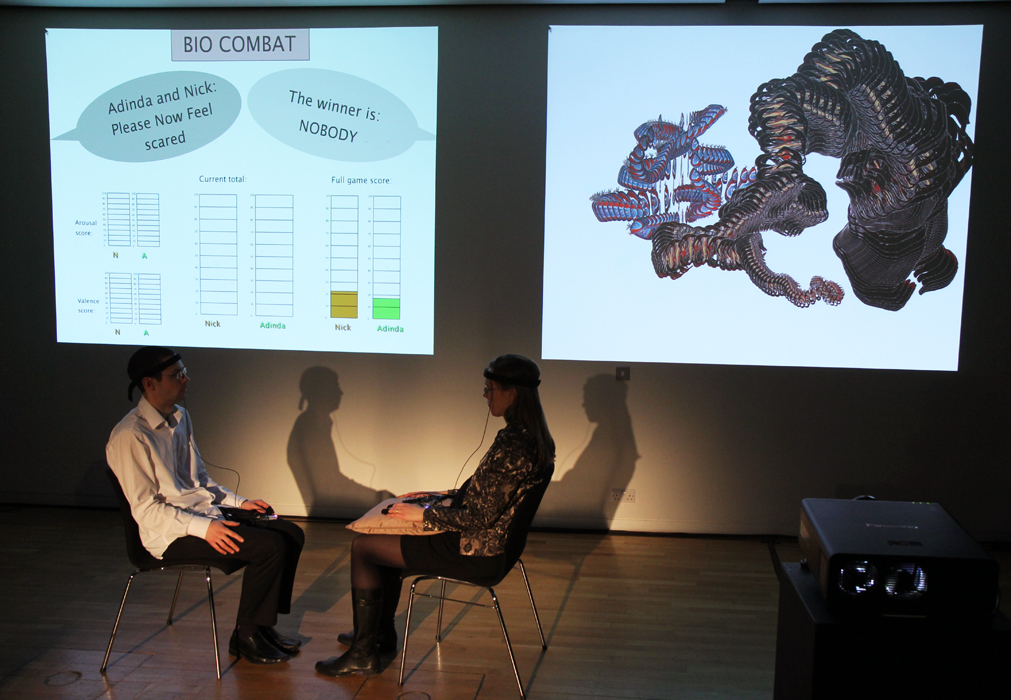

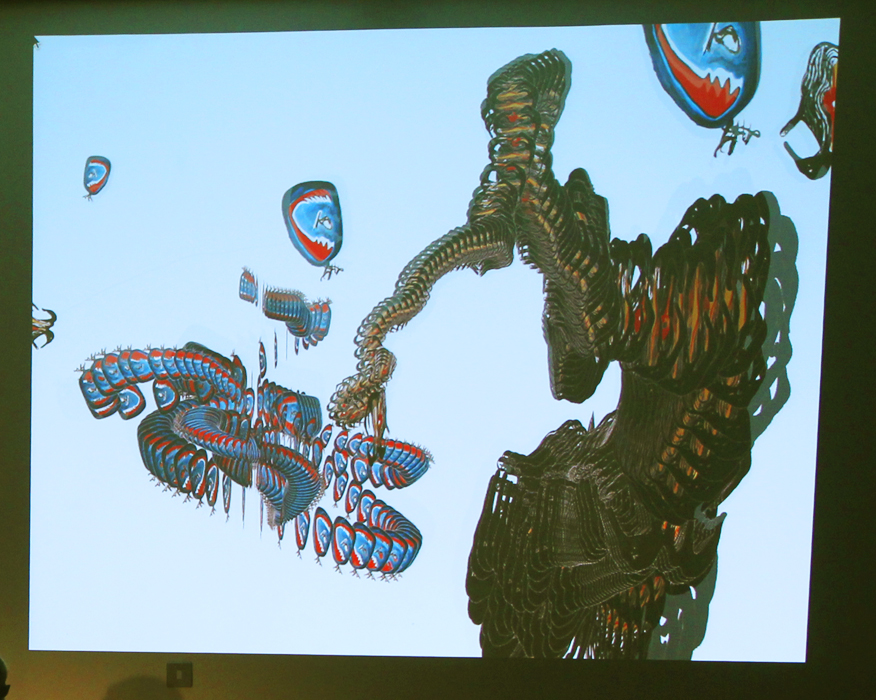

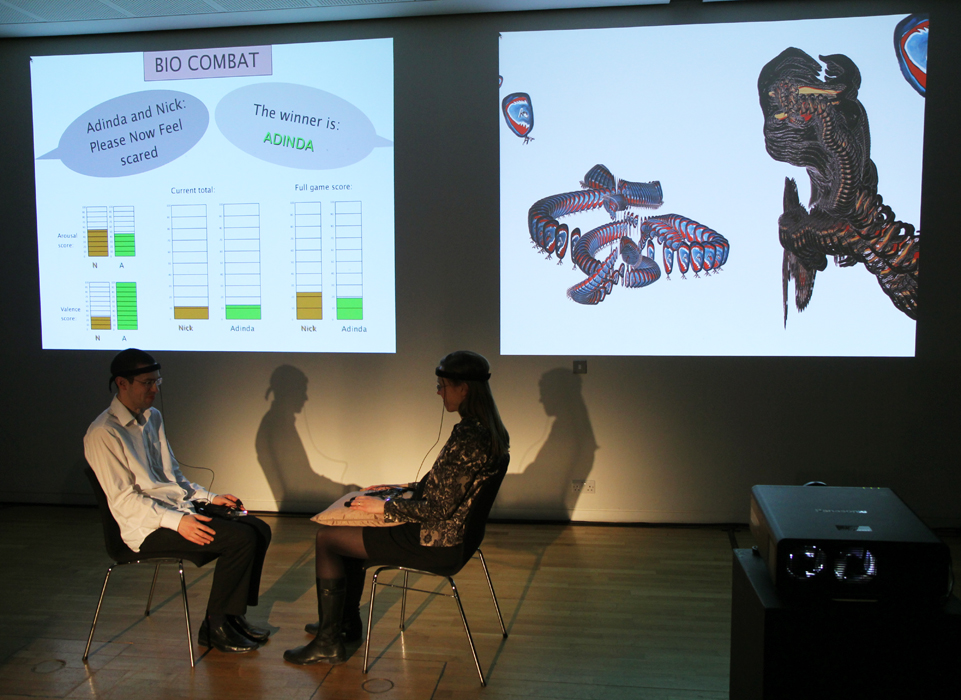

BioCombat is "played" by two performers whose heart rate, galvanic skin response and EEG data are tracked live. The left screen shows the game element of the piece: at the start of each one-minute interval the computer demands a new emotion to be felt by the performers, and after twenty seconds it decides who is best at feeling the requested emotion. Points are scored when the correct arousal and valence are measured. This decision is then updated live until the next emotion is requested. The winner is rewarded by having their electroacoustic material play louder than their opponent's. The right screen shows animations that are meant to express the target emotion in an abstract way. This projection consists of two animated shapes: the left shape is driven directly by features of the left performer's biosignals, and the right shape does the same for the right performer. Both animations also respond to live features of the sound, such as loudness, rhythm, dissonance and the frequency distribution of the energy spectrum.

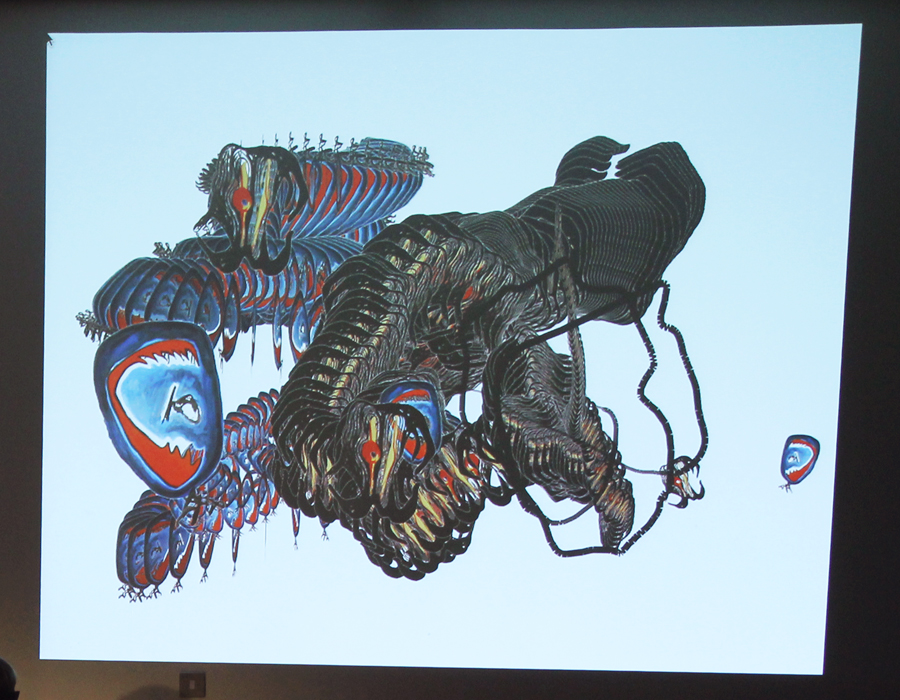

The graphics used are based on drawings by Adinda van 't Klooster. She evaluated the emotional expressiveness of the graphics through an online survey and used the most successful graphics in the animations of BioCombat.

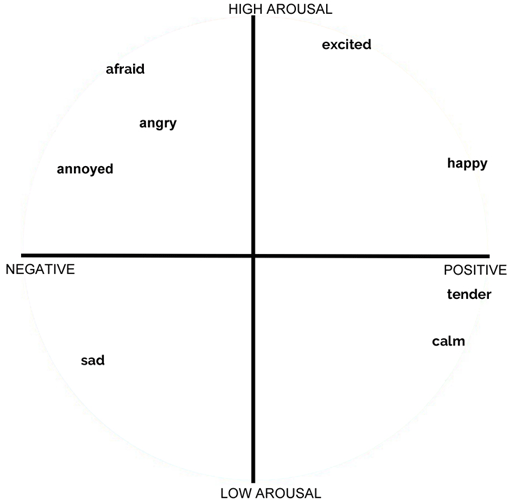

In order to gain 'emotional intelligence' the system has been trained through previous sessions where the performers listened to pieces of music that made them feel either happy, sad, tender, scared, calm, excited, annoyed or angry. Whilst they were listening, their EEG, GSR and heart rate data was recorded. Two-minute data recordings were created for each of the emotions, and this was done five times at different points throughout various days. This data was then used to train neural network classifiers for each of the performers. In trial rehearsal sessions, the most successful neural networks for the live situation were selected. It was found after various testing sessions that having separate neural networks for arousal and for valence worked best. The eight target emotions were placed in Russell's arousal-valence circle, and when both arousal and valence were in the correct quadrant, the performer gained a point. If only arousal or only valence was correct, half a point could be scored.
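The scoring rule described above can be sketched in a few lines of Python. This is only an illustration, not the code used in the piece: the function name `score`, the `TARGETS` table and the "high"/"low" and "positive"/"negative" labels are hypothetical, and the placement of each emotion in a quadrant is an assumption loosely following Russell's circumplex rather than the placement actually used in BioCombat.

```python
# Illustrative sketch only; names and quadrant placements are assumptions,
# not taken from the BioCombat system itself.
TARGETS = {
    # emotion: (expected arousal, expected valence)
    "happy":   ("high", "positive"),
    "excited": ("high", "positive"),
    "tender":  ("low",  "positive"),
    "calm":    ("low",  "positive"),
    "sad":     ("low",  "negative"),
    "scared":  ("high", "negative"),
    "annoyed": ("high", "negative"),
    "angry":   ("high", "negative"),
}


def score(target, arousal, valence):
    """Return 1.0 if both classifier outputs match the target emotion,
    0.5 if only one of them matches, and 0.0 if neither does."""
    want_arousal, want_valence = TARGETS[target]
    # Each boolean counts as 0 or 1, so hits is 0, 1 or 2.
    hits = (arousal == want_arousal) + (valence == want_valence)
    return hits / 2


# Example: the target is "calm" but the arousal classifier reports "high".
print(score("calm", "high", "positive"))  # only valence matches
```

Here `arousal` and `valence` stand for the outputs of the two separate classifiers, reduced to one label each; how the real networks' outputs are thresholded into such labels is not described in the text.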

The placing of the emotions in the arousal-valence space is largely based on research by Russell (footnote 1).

Credits

System programming by Nick Collins

Animation and graphics by Adinda van 't Klooster

Electroacoustic composition by Nick Collins and Adinda van 't Klooster

Footnotes

- Russell, J.A. (1980). A Circumplex Model of Affect. Journal of Personality and Social Psychology, 39, pp. 1161-1178.
