Guest blog by Tuomas Eerola

Observations from the Affect Formations concert, held on 27 February 2015 in the Concert Hall of the Department of Music at Durham University.

This event was the first of two concerts created by the artist in residence, Adinda van 't Klooster. The concert set out to explore how music influences emotions, how computers might detect emotions in music, and how sonic and visual creations overlap and interact in a live performance. It combined artwork by Adinda van 't Klooster with compositions and improvisations by Nick Collins (piano), John Snijders (piano), Matthew Warren (composition), and a postgraduate ensemble combining electronic and acoustic sounds.

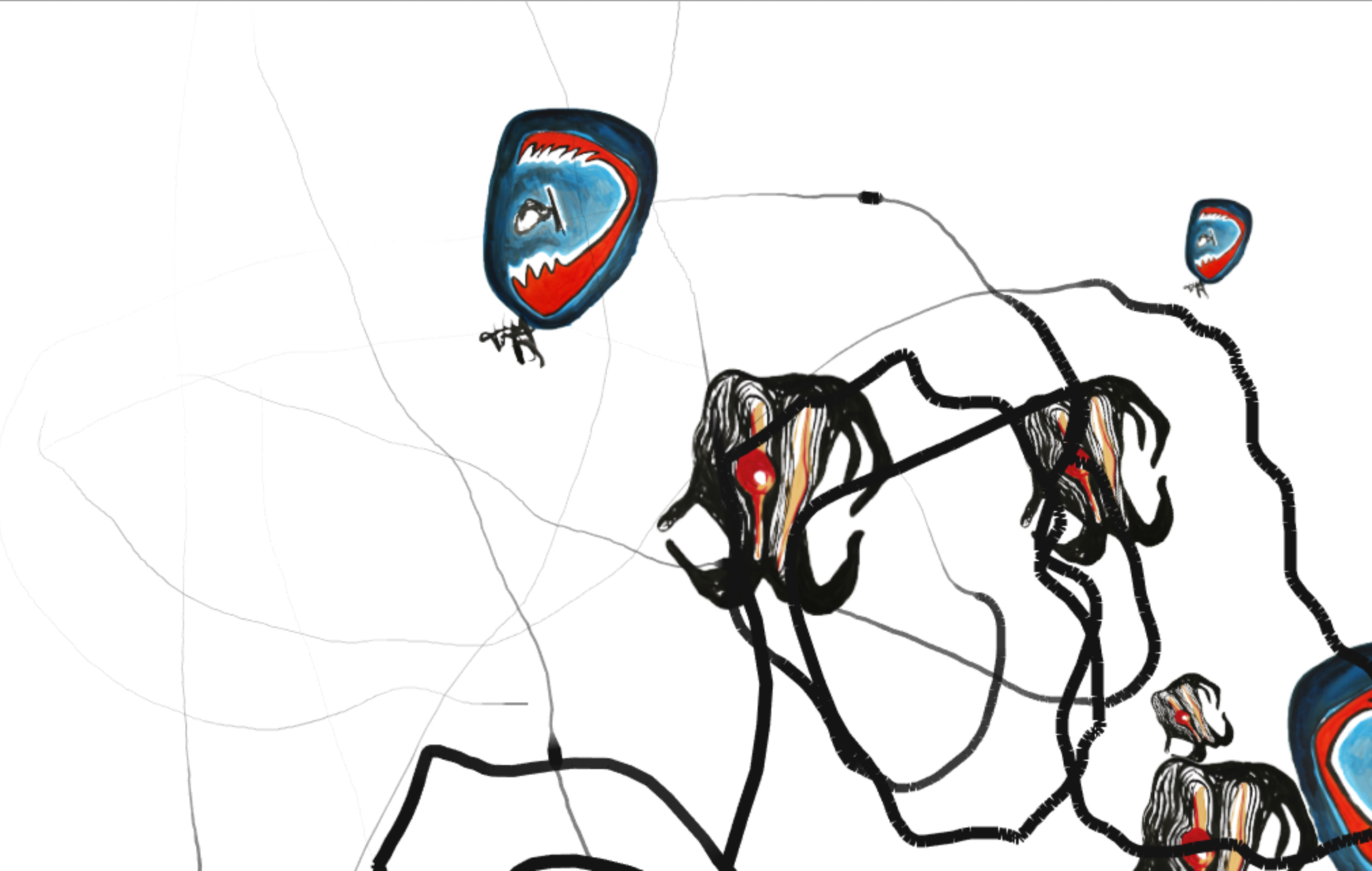

As an audience member, I found that the mesmerising visualisations projected on a large screen next to the performers played a major role in my experience of the music. The visual elements consisted of Adinda's artwork, transformed by a series of intricate processes (rotation, spinning, floating, zooming) that blended into seamless sequences. Moreover, the animations reacted to the performance in a clearly responsive and immediate fashion, which led the onlooker to find meaningful narratives linking the music and the animations. Of course, I know that this was an entirely algorithm-driven process (making clever use of SuperCollider and Processing), but it was designed to offer enough interesting visual elements to keep viewers under its spell without overloading them. On top of this, a computer also interjected musical commentary into the performance, which added another layer of interpretation.

The second theme was the creation of music out of artwork. Analogies between colour, shape and sound have always fascinated composers, performers, artists and scientists. Sir Isaac Newton was obsessed with the parallels between musical notes and colours, and Wolfgang Köhler [footnote1] associated shapes and sounds in a series of experiments whose findings have since been replicated and confirmed by neuroscientists [footnote2].

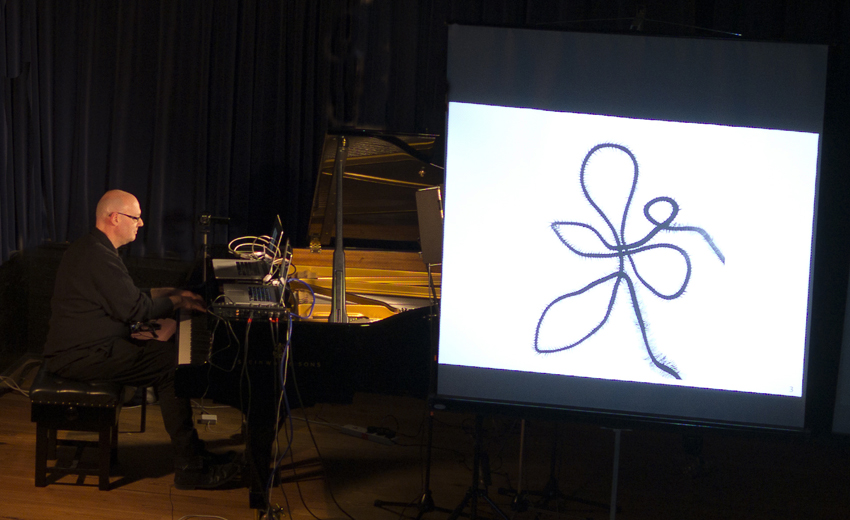

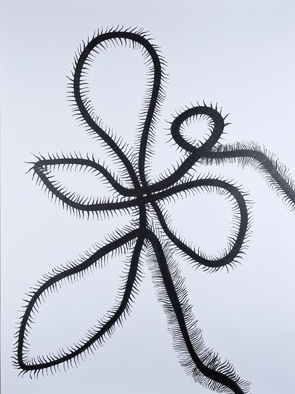

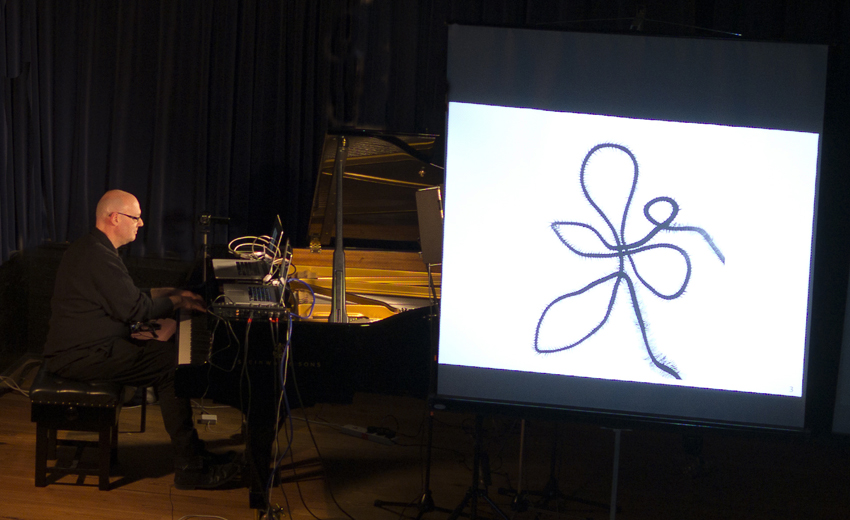

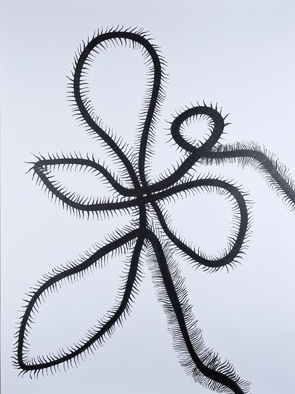

In my opinion, this was musically the most interesting part. It was fascinating to immerse oneself in the unfolding creative process, where the image actually helped one navigate the terrain of affects. The graphical scores were images created by Adinda, and they were performed in turn by Nick Collins, John Snijders and the ensemble. For each image and improvisation, the logic by which the image was transformed into sound soon became apparent. One could easily devise a matching experiment in which listeners had to associate performances with images [footnote3], and I am fairly certain that these performances and images would yield a high level of consensus, even though the performers adopted different strategies and devices to bring out the essence of the artworks. This mapping between musical improvisation and visual image also invites the listener to think about the analogies between the sonic and visual arts. John Snijders's interpretation of Graphical Score 3 was a particularly illustrative example of how visual art turns into sonic art.

Left image: John Snijders with Graphical Score 3 projected, photograph by Aaron Guy

Right image: Graphical Score 3, © Adinda van 't Klooster, 2014

Here, the analogies consisted of:

- colour and timbre (the black visual shape and the low, dark sounds)

- visual surface and musical texture (the small, sharp "hairs" and the fast, jagged, edgy rhythmic motives)

- visual shape and musical structure (the looping shape and the slow build-up of tonal and textural progressions that twisted and turned before slowly winding down)

In addition to these relatively straightforward analogies, other devices could also be identified. Findings from a series of recent experiments suggest that such cross-domain mappings are decoded fairly uniformly [footnote4] and that a large number of stable mappings exists, although, of course, employing a large number of such correspondences does not necessarily lead to a more interesting artwork.

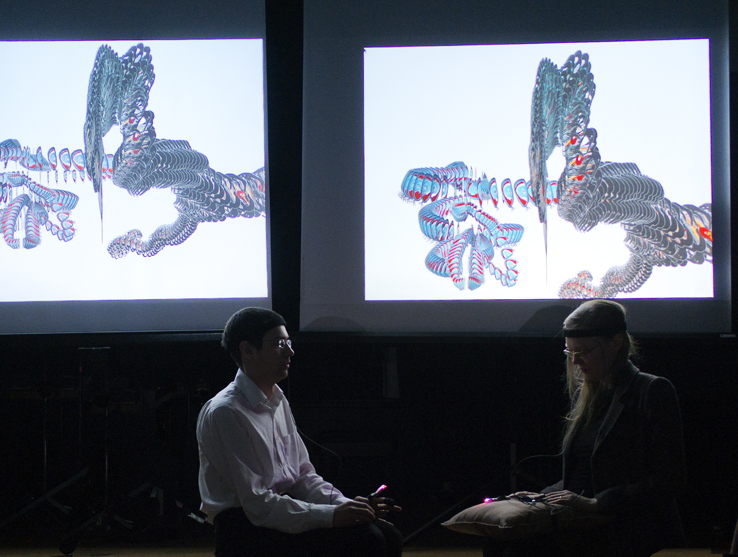

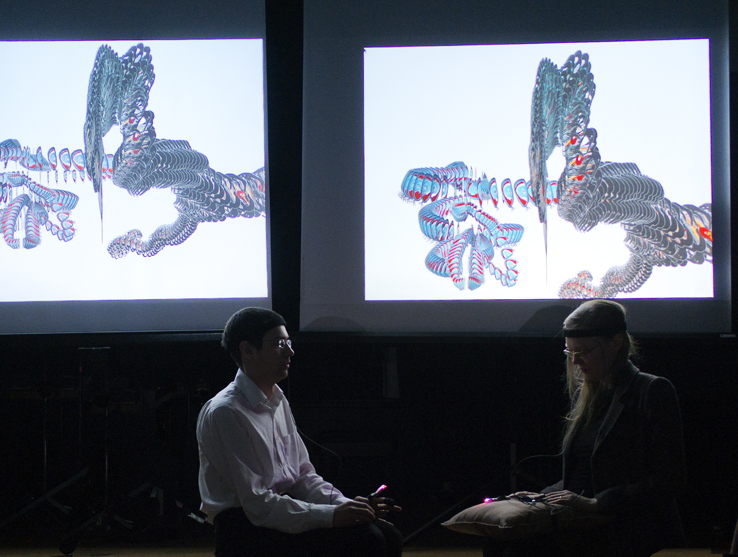

The finale of the concert was "Bio Combat", a performance in which Adinda and Nick were fitted with sensors measuring their arousal (skin conductance and heart rate, as well as EEG). The computer captured the signals and determined which of the two was better at feeling the emotion projected by the music at any given time. This is a tough challenge in a live performance situation, since peripheral measures are fairly volatile in general and strongly affected by room temperature and by the performer's level of anxiety and arousal. Classifying the signals into discrete emotional states such as happy, sad, angry, tender, calm and excited is also difficult, since many of these states are fairly similar in terms of arousal, which is easier to deduce from these measures than the underlying positive-negative (valence) axis of core affect. The classification is also highly dependent on the amount and variability of the training data. Nonetheless, it was a wonderful idea to demonstrate how affective states can be recognised by a computer and how music may induce certain emotional states.

Image of Bio Combat by Adinda van 't Klooster and Nick Collins, 2015, photograph by Aaron Guy

All in all, this was an impressive concert from whichever perspective you choose to look at it. The next opportunity to experience this work is at the Sage Gateshead in two weeks' time, which will probably be an even more terrific and affective experience.

Footnotes

[footnote1]: Köhler, W. (1929). Gestalt Psychology. New York: Liveright.

[footnote2]: Ramachandran, V. S., & Hubbard, E. M. (2001). Synaesthesia: A window into perception, thought and language. Journal of Consciousness Studies, 8(12), 3–34.

[footnote3]: A number of such experiments have been carried out, for example by Lipscomb and Kim (2004) and by Eitan and Rothschild (2010).

[footnote4]: See, for instance, the series of production experiments on how musicians associate shapes with music in Küssner, M. B. (2013). Music and shape. Literary and Linguistic Computing, 28(3), 472–479.

Image © Adinda van 't Klooster, 2015
